Testing

Automated testing is an essential component of how the team develops and delivers robust software applications. Each time a new feature is added or a line of code is modified, developers write new tests or modify existing ones to validate the implementation.

Individual tests are grouped into test suites, which are executed each time new code is pushed to the code repository as part of a Continuous Integration (CI) pipeline.

Testing Strategy

Developers are responsible for writing automated tests that ensure all use cases of an application work correctly. To make sure the right tests get written, the team follows a testing strategy based on the following principles:

- Prioritize practicality over dogmatism.

- The confidence gained from the test suite should be worth the effort put into it.

- Revisit the testing requirements on each project and at each stage of the project.

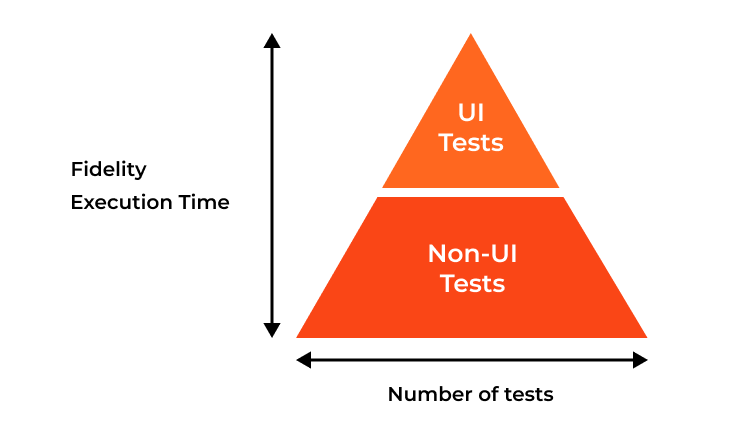

Test Pyramid

At a high level, the team recommends writing tests that cover the following types of tests:

Inspired by the Test Pyramid, the team recommends writing many low-level tests, grouped under the umbrella of non-UI tests, and fewer high-level tests, commonly called UI tests (or end-to-end tests). The rationale is that non-UI tests are an order of magnitude faster to execute, thus providing a faster feedback loop. Nonetheless, UI tests are essential to validate the user’s journey and ensure that the application works as expected from the user’s perspective.

However, the team does not recommend following the Test Pyramid dogmatically, as initially proposed and documented. Notably, the team’s testing pyramid does not make a distinction between unit tests and integration tests. The terminology for each aforementioned test type is not standardized, carries different interpretations depending on the platform and stack, and, worse, can lead to inefficiencies. For simplicity and practicality, the team groups unit and integration tests under the umbrella of non-UI tests.

The second key difference from the original Test Pyramid is that the criteria differentiating each type of test are limited to execution time and fidelity, since these are the only widely recognized factors. Other factors, such as the cost of writing and maintaining tests, vary too significantly depending on the platform, stack, and project, and hence cannot be generalized. For example, writing UI tests for a JavaScript application can be significantly easier than writing UI tests for a native mobile application.

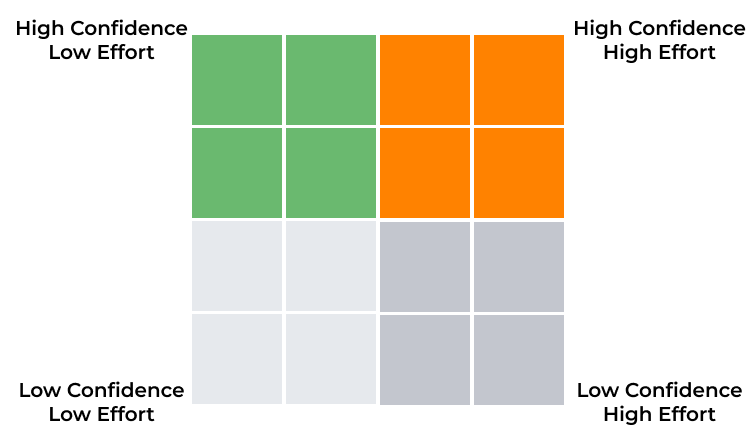

Test Matrix

Developers must always factor in two dimensions when writing tests: the confidence gained from the test, and the effort required to write and maintain the test. The team recommends writing tests that provide the most confidence for the least amount of effort. The following illustrates the team’s test matrix:

Depending on the platform, stack, and testing tools, both non-UI and UI tests might fall into any of the quadrants. However, as a rule of thumb, the team must aim to write tests that fall into the top quadrants of the matrix, as they bring the most confidence. Conversely, the team must avoid writing tests that fall into the bottom quadrants.

Ideally, tests fall into the top-left quadrant. However, some critical tests unavoidably fall into the top-right quadrant and must still be implemented. In either case, the team must always ensure that the time investment is worth it. The return on investment (ROI) is measured in the confidence gained from the test: if the same confidence can be gained by writing a test that requires less effort, the team must opt for the latter.
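To make the selection rule concrete, the following Python sketch scores candidate tests on the matrix’s two axes and picks the cheapest one that reaches a required confidence. Everything in it is hypothetical. The `CandidateTest` type, the 0.0 to 1.0 scores, and the 0.5 midpoint are illustrative assumptions. It also assumes, as the text implies, that confidence is the vertical axis and that the left side means lower effort. In practice these scores are team judgment calls, not measured values.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class CandidateTest:
    """A hypothetical way to score a test idea on the two axes of the matrix."""
    name: str
    confidence: float  # 0.0 (none) to 1.0 (high); the scale is an assumption
    effort: float      # 0.0 (trivial) to 1.0 (costly to write and maintain)


def quadrant(test: CandidateTest, midpoint: float = 0.5) -> str:
    """Place a test in the matrix: confidence is vertical, effort is horizontal."""
    vertical = "top" if test.confidence >= midpoint else "bottom"
    horizontal = "left" if test.effort < midpoint else "right"
    return f"{vertical}-{horizontal}"


def pick_test(candidates: List[CandidateTest], required_confidence: float) -> Optional[CandidateTest]:
    """Among tests reaching the required confidence, prefer the least effort."""
    eligible = [t for t in candidates if t.confidence >= required_confidence]
    if not eligible:
        return None
    return min(eligible, key=lambda t: t.effort)


# Hypothetical scores for three ways of covering the same feature.
candidates = [
    CandidateTest("UI test of the checkout flow", confidence=0.9, effort=0.8),
    CandidateTest("Non-UI test of the order total", confidence=0.9, effort=0.3),
    CandidateTest("Test asserting on private helper calls", confidence=0.3, effort=0.2),
]
best = pick_test(candidates, required_confidence=0.8)
print(best.name, quadrant(best))
```

With these made-up scores, the UI test and the non-UI test reach the same confidence. The rule therefore picks the non-UI test, which lands in the top-left quadrant.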

Adaptive Strategy

The team must adapt the testing strategy to the project’s needs and constraints (namely the platform, stack, and testing tools) and the feature’s criticality. The following illustrates the team’s adaptive testing strategy:

- Define a standard test strategy (baseline) per project.
- For each feature, assess:
  - Whether to deviate from the project’s baseline testing strategy.
  - The required confidence, based on how critical the feature is.
  - The effort to spend on testing.

Baseline Definition

The baseline testing strategy is defined at the beginning of each project based on the platform, stack, and testing tools. It serves as the default testing strategy for the project. The following are examples of baseline testing strategies:

| Development Type | Platform | Stack | Testing Tools | Industry | Testing Strategy |
|---|---|---|---|---|---|
| Greenfield | Web | Ruby | RSpec, Capybara | Marketing | Non-UI tests: 80%, UI tests: 50% |
| Greenfield | Web | Ruby | RSpec, Capybara | Finance | Non-UI tests: 100%, UI tests: 100% |
| Greenfield | Web | JavaScript | Jest, Cypress | eCommerce | Non-UI tests: 75%, UI tests: 100% |
| Brownfield | Web | JavaScript | Jest, Cypress | eCommerce | Non-UI tests: 50%, UI tests: 80% |

This baseline should also be revisited throughout the project’s lifecycle. For example, the team might decide to write more UI tests at the beginning of a brownfield development project to ensure that the user journeys keep working as expected while refactoring takes place. However, as the project matures, the team might decide to write fewer UI tests and more non-UI tests to ensure that the core business logic works as expected.

Feature-based Assessment

For each feature, the team must assess whether to deviate from the project’s baseline testing strategy. The following illustrates the team’s feature-based assessment:

The assessment is usually based on the following criteria:

- How critical the feature is to the business. For example, the payment processing feature of an eCommerce application is more critical than the product return process.

- How much a bug would cost the business. For example, a bug in the checkout process of an eCommerce application would cost more than a bug in the product review functionality.

- How mature the feature is. For example, a feature that is still in the ideation phase should not be tested as thoroughly as a feature that is in its tenth iteration.

Best Practices

General

- Avoid over-engineering tests.

To keep test suites maintainable, tests need to be written as a series of simple procedures that a human can read. If a test fails, the reason should be obvious and the fix quick. Many test frameworks come with complex domain-specific languages (DSLs) and convenience methods, but the team prefers focusing on the essence of testing, i.e., the AAA pattern (Arrange, Act, Assert), over complex and non-standard setups.
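A minimal illustration of the AAA pattern using Python’s standard `unittest` module follows. The `apply_discount` function is a hypothetical piece of code under test. What matters is the shape of the test: a short Arrange block, a single Act step, and a plain assertion, with no custom DSL or helpers.

```python
import unittest


def apply_discount(total_cents: int, percent: int) -> int:
    """Hypothetical code under test: the total after a percentage discount, in cents."""
    return total_cents - (total_cents * percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    def test_applies_percentage_discount_to_total(self):
        # Arrange: only the inputs this test needs, declared inline.
        total_cents = 10_000
        percent = 15

        # Act: exercise exactly one behavior.
        discounted = apply_discount(total_cents, percent)

        # Assert: a plain, readable expectation.
        self.assertEqual(discounted, 8_500)
```

Run it with `python -m unittest` pointed at the module that contains it. Because each of the three sections is visibly separate, a failure points straight at the step that broke.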

- Each test must be executed in isolation from other tests.

Flaky tests are often the result of tests over-sharing fixtures and setup. Instead, each test must set up its own fixtures and must not inherit or rely on any previous state. While this might result in code repetition, it is an acceptable trade-off for stable tests. The team recommends using the `before` and `after` hooks sparingly to set up and tear down fixtures.

- Use factories to create test fixtures.

Factories are a convenient tool to create reusable test fixtures and avoid inherent code duplication in tests. The team recommends using factories for complex objects, such as domain models, but not for simple objects, such as strings or numbers, which can be inlined into each test.
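The following sketch combines the two practices above. A small factory builds a valid domain object with defaults, and each test builds exactly the state it needs instead of sharing fixtures through `setUp` (the `unittest` counterpart of a `before` hook). `Order`, `LineItem`, and `build_order` are hypothetical names used only for illustration.

```python
import unittest
from dataclasses import dataclass, field
from typing import List


@dataclass
class LineItem:
    sku: str
    unit_price_cents: int
    quantity: int = 1


@dataclass
class Order:
    customer_email: str
    items: List[LineItem] = field(default_factory=list)
    status: str = "pending"

    def total_cents(self) -> int:
        return sum(item.unit_price_cents * item.quantity for item in self.items)


def build_order(**overrides) -> Order:
    """Hypothetical factory: valid defaults, overridden only where a test cares."""
    attributes = {
        "customer_email": "customer@example.com",
        # Built fresh on every call, so no two tests ever share the same list.
        "items": [LineItem(sku="SKU-1", unit_price_cents=2_000)],
    }
    attributes.update(overrides)
    return Order(**attributes)


class OrderTest(unittest.TestCase):
    # Deliberately no setUp(): each test builds its own fixtures and relies on no prior state.

    def test_total_sums_all_line_items(self):
        order = build_order(items=[LineItem("SKU-1", 2_000, quantity=2), LineItem("SKU-2", 500)])
        self.assertEqual(order.total_cents(), 4_500)

    def test_new_orders_start_as_pending(self):
        order = build_order()
        self.assertEqual(order.status, "pending")
```

`build_order` creates a fresh list of line items on every call, so one test mutating its order cannot affect another. Each test overrides only the attributes it asserts on, and simple values such as prices and quantities stay inline.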

- Use mocks and stubs for efficiency, not to classify a test as a unit or integration test.

Mocks and stubs are tools to increase the stability of tests and optimize the test suite execution speed. However, like any tool, they come with trade-offs. First, they require tests to know too much about the implementation details of the code under test, which is an anti-pattern since changing the implementation details will require changing the tests even though the feature did not change intrinsically. Thus, mocking and stubbing can yield high maintenance costs. Second, they can lead to false positives or negatives due to the test isolation, i.e., failing for the wrong reasons or not failing when they should. Therefore, the team primarily uses mocks and stubs to isolate the code under test from external services, such as payment gateways, but not to isolate the code under test from internal services, such as databases, unless it provides a significant gain in stability or test execution speed.
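As a minimal sketch of stubbing only the external boundary, the following Python example uses `unittest.mock.create_autospec` to stand in for a payment gateway. `PaymentGateway`, `checkout`, `PaymentDeclined`, and the shape of the response dictionary are all hypothetical. The internal logic in `checkout` runs for real. Only the network call to the assumed third-party provider is replaced.

```python
import unittest
from unittest import mock


class PaymentDeclined(Exception):
    """Raised when the payment provider refuses a charge."""


class PaymentGateway:
    """Hypothetical wrapper around a third-party payment API (the external service)."""

    def charge(self, amount_cents: int, card_token: str) -> dict:
        raise NotImplementedError("would call the real payment provider over the network")


def checkout(total_cents: int, card_token: str, gateway: PaymentGateway) -> str:
    """Hypothetical code under test: charge the card and return the charge id."""
    response = gateway.charge(total_cents, card_token)
    if response["status"] != "succeeded":
        raise PaymentDeclined(response.get("reason", "unknown"))
    return response["id"]


class CheckoutTest(unittest.TestCase):
    def test_returns_charge_id_when_payment_succeeds(self):
        # Arrange: stub only the external boundary; autospec enforces charge()'s signature.
        gateway = mock.create_autospec(PaymentGateway, instance=True)
        gateway.charge.return_value = {"status": "succeeded", "id": "ch_123"}

        # Act
        charge_id = checkout(4_500, "tok_test", gateway)

        # Assert
        self.assertEqual(charge_id, "ch_123")
        gateway.charge.assert_called_once_with(4_500, "tok_test")

    def test_raises_when_payment_is_declined(self):
        gateway = mock.create_autospec(PaymentGateway, instance=True)
        gateway.charge.return_value = {"status": "failed", "reason": "insufficient_funds"}

        with self.assertRaises(PaymentDeclined):
            checkout(4_500, "tok_test", gateway)
```

`create_autospec` is used rather than a bare `Mock` because it makes the stub reject calls that do not match the real method’s signature. This limits one of the false-positive risks described above.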

- Enforce test coverage checks at the pull request level.

Regardless of the development type, the team must enforce test coverage checks to ensure that the right number of tests, both UI and non-UI, are written and that overall test coverage does not drop below a set threshold. The threshold is defined by the team as part of the baseline testing strategy.
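A coverage gate can be as small as a function that compares measured percentages against the baseline and returns a non-zero exit code for the CI job. This sketch rests on three assumptions. First, the CI pipeline already produces separate non-UI and UI coverage percentages; how to measure them is tool-specific. Second, the percentages in the baseline table above are read as minimum coverage thresholds, which is an interpretation rather than something the table states. Third, `CoverageThresholds`, `coverage_violations`, and `gate` are hypothetical names.

```python
import sys
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class CoverageThresholds:
    """Minimum coverage percentages, assumed to come from the project's baseline."""
    non_ui: float
    ui: float


def coverage_violations(non_ui_pct: float, ui_pct: float, thresholds: CoverageThresholds) -> List[str]:
    """Return one message per coverage figure that is below its threshold."""
    violations = []
    if non_ui_pct < thresholds.non_ui:
        violations.append(f"Non-UI coverage {non_ui_pct:.1f}% is below the {thresholds.non_ui:.1f}% threshold")
    if ui_pct < thresholds.ui:
        violations.append(f"UI coverage {ui_pct:.1f}% is below the {thresholds.ui:.1f}% threshold")
    return violations


def gate(non_ui_pct: float, ui_pct: float, thresholds: CoverageThresholds) -> int:
    """Print any violations and return a process exit code (0 = pass, 1 = fail)."""
    violations = coverage_violations(non_ui_pct, ui_pct, thresholds)
    for message in violations:
        print(message, file=sys.stderr)
    return 1 if violations else 0


# Thresholds taken from the "Greenfield / Ruby / Marketing" example row of the baseline table.
marketing_baseline = CoverageThresholds(non_ui=80.0, ui=50.0)
exit_code = gate(non_ui_pct=82.3, ui_pct=47.0, thresholds=marketing_baseline)
```

In a CI job, the script would end with `sys.exit(gate(...))` so that a failing check blocks the pull request.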

- Continuously monitor the total runtime of test suites.

Having long-running test suites decreases the productivity of developers and the effectiveness of tests. Having a short feedback loop is paramount. As the test suites grow, the team must break them down into several jobs and execute them in parallel.
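One common way to break a growing suite into parallel jobs is to balance them by historical runtime. The sketch below uses a greedy longest-first heuristic: it sorts test files by duration and always assigns the next file to the job with the least accumulated time. The per-file runtimes are assumed to come from a previous CI run, for example from its test reports. The file names and `split_into_jobs` are hypothetical, and some CI services provide timing-based splitting out of the box.

```python
import heapq
from typing import Dict, List


def split_into_jobs(runtimes: Dict[str, float], job_count: int) -> List[List[str]]:
    """Distribute test files across `job_count` parallel jobs, balancing total runtime.

    Greedy longest-processing-time-first: the slowest remaining file always goes
    to the job that currently has the smallest accumulated runtime.
    """
    if job_count < 1:
        raise ValueError("job_count must be at least 1")
    jobs: List[List[str]] = [[] for _ in range(job_count)]
    # Min-heap of (accumulated seconds, job index); the top is the least-loaded job.
    loads = [(0.0, index) for index in range(job_count)]
    heapq.heapify(loads)
    for path, seconds in sorted(runtimes.items(), key=lambda item: item[1], reverse=True):
        load, index = heapq.heappop(loads)
        jobs[index].append(path)
        heapq.heappush(loads, (load + seconds, index))
    return jobs


# Hypothetical per-file runtimes, in seconds, from a previous CI run.
runtimes = {
    "ui/checkout": 120.0,
    "ui/signup": 90.0,
    "api/orders": 60.0,
    "models/order": 30.0,
    "models/user": 25.0,
}
for number, files in enumerate(split_into_jobs(runtimes, job_count=2), start=1):
    total = sum(runtimes[f] for f in files)
    print(f"job {number}: {total:.0f}s {files}")
```

Summing each job’s runtimes after every run also gives a cheap way to track the total runtime over time and to notice when another job is needed.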

- Remove old or deprecated tests.

Do NOT be afraid to remove tests. As the software application evolves, some tests, while still passing, might add little value to the overall suite as newer tests already perform the same validations.

Greenfield Development

- Write non-UI and UI tests from day one.

While the general tendency is to write non-UI tests first and UI tests later, the team recommends writing both non-UI and UI tests simultaneously. Non-UI tests support validating backend functionality, while UI tests support validating frontend functionality. Thus, writing both types of tests simultaneously ensures that the team is validating the feature from both ends. There is no technical limitation to this practice as long as the codebase has an adequate testing setup, which comes out of the box with all the team’s project templates.

- Prioritize happy paths over edge cases.

Happy paths are the most common user journeys, while edge cases are the less common ones. Prioritizing happy paths therefore ensures that the most common user journeys work as expected, which matters most for an early-stage project. In addition, since much of the codebase is still in flux at the beginning of a greenfield development project, writing edge case tests is inefficient: they will likely break early as the codebase evolves. The team recommends adding edge case tests once the project matures and the codebase stabilizes. Based on the test matrix, writing happy path tests qualifies as lower effort and higher confidence than writing edge case tests.

Brownfield Development

- Write UI tests first when test coverage is low to non-existent.

When the team works on a brownfield development project, test coverage is commonly in the low single digits or non-existent. Writing non-UI tests is usually not efficient in this case, since the codebase was not designed to be testable. Based on the test matrix, writing UI tests qualifies as high effort and high confidence, while writing non-UI tests qualifies as high effort and low confidence. The team therefore recommends writing UI tests first. This is the most efficient way to ensure that the various user journeys keep working as expected while the code is changed and refactored to make way for new features.