Automated testing is an integral part of the software development lifecycle at Nimble. In practice, it means that we write tests for every part of the codebase, thus keeping the test coverage as close to 100% as possible. This results in large test suites over time. We will share the tips and tricks we have used to ensure the test suite remains fast and stable. Although Elixir is used for demonstration, similar techniques can be applied to other programming languages.

In this article, we will cover two major problems:

1. Flaky Tests

2. Slow Test Suite

Grab a coffee, and let’s explore what they are and how to solve them.

What is a Flaky Test?

If a test result changes between runs even though neither the code nor the test has changed, the test is called a flaky test. For example, a test fails on a commit that only updates an unrelated README file.

How Do Flaky Tests Affect the Team?

Before merging changes, the whole test suite must pass on the Continuous Integration (CI) server. If tests fail randomly, they quickly become a blocker: we cannot merge the changes on time, and developers lose productivity because they have to re-run the tests and wait. Besides, every failure requires at least one more CI pipeline run, which increases CI costs too.

Common Causes of Flaky Tests

1. Unique Data

For example, the user email needs to be unique in the application. However, when creating test data, the randomization algorithm might not be robust enough, and we could end up with a duplicated email.

Solution

Use a robust uniqueness mechanism; prefer ExMachina's `sequence` function, which produces a distinct value on every call instead of relying on randomness. See more techniques in Generating Test Data in Phoenix application using ExMachina & Faker.

```elixir
def user_factory do
  %MyApp.User{
    email: sequence(:email, &"email-#{&1}@example.com")
  }
end
```
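ExMachina's `sequence` relies on an incrementing counter rather than randomness. The same idea can be sketched with the standard library alone: `System.unique_integer/1` with the `:positive` and `:monotonic` options returns strictly increasing integers within the running node. The `UniqueEmail` module below is a hypothetical helper for illustration, not part of ExMachina.

```elixir
defmodule UniqueEmail do
  @moduledoc """
  Hypothetical helper: builds emails that are unique within the running node
  by using a monotonic counter instead of random data.
  """

  @spec generate(String.t()) :: String.t()
  def generate(prefix) do
    # :positive avoids negative numbers; :monotonic guarantees strictly increasing values
    n = System.unique_integer([:positive, :monotonic])
    "#{prefix}-#{n}@example.com"
  end
end

emails = for _ <- 1..1_000, do: UniqueEmail.generate("email")
length(Enum.uniq(emails))
# => 1000
```

Unlike a random value, a counter cannot repeat, so the uniqueness of the generated data no longer depends on luck.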

In some cases, different test modules need the same unique data. Below is an example where two modules both insert a coin with the unique symbol `BTC`:

```elixir
# test 1
defmodule MyApp.Module1Test do
  use MyApp.DataCase, async: true

  describe "..." do
    test "..." do
      ...
      btc = insert(:coin, symbol: "BTC")
      ...
    end
  end
end

# test 2
defmodule MyApp.Module2Test do
  use MyApp.DataCase, async: true

  describe "..." do
    test "..." do
      ...
      btc = insert(:coin, symbol: "BTC")
      ...
    end
  end
end
```

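Because both modules can run at the same time, their inserts of `BTC` can collide on the database's unique constraint. In these cases, the rule of thumb is to run these test modules synchronously: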

```elixir
# test 1
defmodule MyApp.Module1Test do
  @moduledoc """
  This test needs to run as `async: false` because it contains the unique data `BTC`
  """

  use MyApp.DataCase, async: false

  describe "..." do
    test "..." do
      ...
      btc = insert(:coin, symbol: "BTC")
      ...
    end
  end
end

# test 2
defmodule MyApp.Module2Test do
  @moduledoc """
  This test needs to run as `async: false` because it contains the unique data `BTC`
  """

  use MyApp.DataCase, async: false

  describe "..." do
    test "..." do
      ...
      btc = insert(:coin, symbol: "BTC")
      ...
    end
  end
end
```

2. Database Ordering

Consider a feature that lists all users, and its test:

```elixir
defmodule MyApp.Users do
  alias MyApp.{Repo, User}

  def list_all, do: Repo.all(User)
end

defmodule MyApp.UsersTest do
  use MyApp.DataCase, async: true

  alias MyApp.Users

  describe "list_all/0" do
    test "returns all users" do
      first_user = insert(:user)
      second_user = insert(:user)

      assert Users.list_all() == [first_user, second_user]
    end
  end
end
```

Because the query does not specify an order, this test can fail randomly: without an `ORDER BY` clause, PostgreSQL does not guarantee any row order, not even by ID.

So the result could be either [first_user, second_user] or [second_user, first_user].

Solution

Do not assert the order if it is not needed:

```elixir
defmodule MyApp.UsersTest do
  use MyApp.DataCase, async: true

  alias MyApp.Users

  describe "list_all/0" do
    test "returns all users" do
      _first_user = insert(:user)
      _second_user = insert(:user)

      assert length(Users.list_all()) == 2
    end
  end
end
```
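A length check still passes if the wrong users come back. A stricter assertion that ignores order compares the two lists as multisets. The `OrderInsensitive.same_elements?/2` helper below is hypothetical, a sketch built on `Enum.frequencies/1`:

```elixir
defmodule OrderInsensitive do
  @moduledoc """
  Hypothetical test helper: checks that two lists contain the same elements,
  ignoring order but respecting duplicates.
  """

  @spec same_elements?(list(), list()) :: boolean()
  def same_elements?(left, right) do
    # Enum.frequencies/1 builds %{element => count}, so the order is irrelevant
    Enum.frequencies(left) == Enum.frequencies(right)
  end
end

OrderInsensitive.same_elements?([2, 1], [1, 2])
# => true
OrderInsensitive.same_elements?([1, 1, 2], [1, 2, 2])
# => false
```

In a test like the one above, it could compare `Enum.map(Users.list_all(), & &1.id)` with the IDs of the inserted users.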

If the order matters, sort explicitly in the query and create test data that proves it, for example by inserting records in a different order from the expected one:

```elixir
defmodule MyApp.Users do
  import Ecto.Query

  alias MyApp.{Repo, User}

  def list_all do
    User
    |> order_by(asc: :name)
    |> Repo.all()
  end
end

defmodule MyApp.UsersTest do
  use MyApp.DataCase, async: true

  alias MyApp.{User, Users}

  describe "list_all/0" do
    test "returns all users ordered by ascending name" do
      # Insert out of order so the assertion proves that the query sorts
      _david = insert(:user, name: "David")
      _andy = insert(:user, name: "Andy")

      assert [%User{name: "Andy"}, %User{name: "David"}] = Users.list_all()
    end
  end
end
```
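Sorting by name alone still leaves the relative order of two users with the same name undefined. A common fix is a tie-breaker on a unique column; in Ecto that would be `order_by(asc: :name, asc: :id)`. The hypothetical `StableOrder` module below sketches the same idea on an in-memory list:

```elixir
defmodule StableOrder do
  @moduledoc """
  Hypothetical sketch: sorts users by name and breaks ties by id, so the
  result is fully deterministic.
  """

  def sort_users(users) do
    # Tuples compare element by element: name first, then id
    Enum.sort_by(users, &{&1.name, &1.id})
  end
end

users = [
  %{id: 3, name: "Andy"},
  %{id: 1, name: "David"},
  %{id: 2, name: "Andy"}
]

StableOrder.sort_users(users)
# => [%{id: 2, name: "Andy"}, %{id: 3, name: "Andy"}, %{id: 1, name: "David"}]
```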

3. Test Data

Consider a user factory and a test for a feature that searches users by name:

```elixir
# user_factory.ex
def user_factory do
  %User{
    name: Faker.Person.name()
  }
end
```

```elixir
andy = insert(:user, name: "Andy")
ana = insert(:user, name: "Ana")
_another_user = insert(:user)
_also_another_user = insert(:user)

assert length(Users.search_user_by_name("An")) == 2
```

This test can fail randomly because the names Faker generates for `_another_user` and `_also_another_user` may also contain the term `An`. For example, if `_another_user` is named `Anakin`, the search returns three users and the test fails.

Solution

Make sure the test data matches the test case exactly. To make the test above stable, give every user an explicit name that matches, or does not match, on purpose:

```elixir
andy = insert(:user, name: "Andy")
ana = insert(:user, name: "Ana")
_another_user = insert(:user, name: "David")
_also_another_user = insert(:user, name: "Teddy")

assert length(Users.search_user_by_name("An")) == 2
```
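To see why explicit names make the result predictable, here is a hypothetical in-memory stand-in for `Users.search_user_by_name/1`. The real function queries the database; this sketch only filters a list with `String.contains?/2`, which is case-sensitive.

```elixir
defmodule NameSearch do
  @moduledoc """
  Hypothetical in-memory stand-in for `Users.search_user_by_name/1`:
  returns the users whose name contains `term` (case-sensitive).
  """

  def search(users, term) do
    Enum.filter(users, &String.contains?(&1.name, term))
  end
end

users = for name <- ["Andy", "Ana", "David", "Teddy"], do: %{name: name}

users
|> NameSearch.search("An")
|> Enum.map(& &1.name)
# => ["Andy", "Ana"]
```

With every name fixed, the result is always the same two users; a generated name such as `Anakin` would add a third match. If the real search were case-insensitive, names like `Diana` would also match, so the non-matching names should be chosen with that in mind.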

4. Un-frozen Date/Time

Consider the following function and its test:

```elixir
defmodule MyApp.TimeHelper do
  def morning? do
    current_time = NaiveDateTime.utc_now() |> NaiveDateTime.to_time()
    current_time.hour < 12
  end
end

defmodule MyApp.TimeHelperTest do
  use ExUnit.Case, async: true

  alias MyApp.TimeHelper

  describe "morning?/0" do
    test "returns true" do
      assert TimeHelper.morning?() == true
    end
  end
end
```

This test fails whenever it runs at or after 12:00 UTC, because `morning?/0` then returns `false`. Its result depends on when it runs, so it is not deterministic.

Solution

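When working with dates and times, prefer to stub the current time. Mimic is a great tool for this; it requires calling `Mimic.copy(NaiveDateTime)` in `test/test_helper.exs` and adding `use Mimic` to the test module.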

```elixir
defmodule MyApp.TimeHelperTest do
  # Requires `Mimic.copy(NaiveDateTime)` in test/test_helper.exs
  use ExUnit.Case, async: true
  use Mimic

  alias MyApp.TimeHelper

  describe "morning?/0" do
    test "given the current time is in the morning, returns true" do
      expect(NaiveDateTime, :utc_now, fn -> ~N[2021-01-15 08:00:00.000000] end)

      assert TimeHelper.morning?() == true
    end

    test "given the current time is in the afternoon, returns false" do
      expect(NaiveDateTime, :utc_now, fn -> ~N[2021-01-15 14:00:00.000000] end)

      assert TimeHelper.morning?() == false
    end

    test "given the current time is in the evening, returns false" do
      expect(NaiveDateTime, :utc_now, fn -> ~N[2021-01-15 20:00:00.000000] end)

      assert TimeHelper.morning?() == false
    end
  end
end
```
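Stubbing is not the only way to freeze time. A different technique, sketched here as an alternative rather than the approach above, is to pass the current time in as an argument that defaults to the real clock. `TimeHelperWithClock` is a hypothetical name for illustration.

```elixir
defmodule TimeHelperWithClock do
  @moduledoc """
  Hypothetical variant of `MyApp.TimeHelper` that takes the current time as an
  argument, defaulting to the real clock, instead of stubbing `NaiveDateTime`.
  """

  @spec morning?(NaiveDateTime.t()) :: boolean()
  def morning?(now \\ NaiveDateTime.utc_now()) do
    # NaiveDateTime structs expose the hour field directly
    now.hour < 12
  end
end

TimeHelperWithClock.morning?(~N[2021-01-15 08:00:00])
# => true
TimeHelperWithClock.morning?(~N[2021-01-15 20:00:00])
# => false
```

Production callers keep calling `morning?()` with no argument, while tests pass a fixed time and need no mocking library.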

5. Using Application.put_env In Asynchronous Tests

In Elixir, it is possible to configure the app through the Config module and read it with Application.get_env.

For example, in the test environment, the app below replaces the module that calls an external HTTP API with a mock module, `MyApp.Account.UserMock`, to avoid making real HTTP requests:

```elixir
defmodule MyApp.Account.UserBehaviour do
  @callback find_by_id!(user_id :: term()) :: term()
end

defmodule MyApp.Account.UserMock do
  @behaviour MyApp.Account.UserBehaviour

  alias MyApp.Account.Schemas.User

  @impl true
  def find_by_id!(user_id), do: %User{id: user_id}
end

defmodule MyApp.Account.User do
  @behaviour MyApp.Account.UserBehaviour

  @impl true
  def find_by_id!(user_id), do: Http.get!("external_api", user_id)
end

defmodule MyApp.Users do
  def find_by_id!(user_id), do: user_resource().find_by_id!(user_id)

  defp user_resource, do: Application.get_env(:my_app, :user_resource)
end
```

```elixir
# config/config.exs
config :my_app, :user_resource, MyApp.Account.User

# config/test.exs
config :my_app, :user_resource, MyApp.Account.UserMock
```

To test the real `MyApp.Account.User` module, we need to change the configuration with `Application.put_env/3`:

```elixir
defmodule MyApp.UsersTest do
  use MyApp.DataCase, async: true
  # Requires `Mimic.copy(Http)` in test/test_helper.exs
  use Mimic

  alias MyApp.Account.Schemas.User
  alias MyApp.Users

  setup_all do
    Application.put_env(:my_app, :user_resource, MyApp.Account.User)
  end

  describe "find_by_id!/1" do
    test "given a valid user ID, calls Account service and returns a User" do
      # stub the external request (Http.get!/2)
      expect(Http, :get!, fn _url, _user_id -> %User{id: "user_1"} end)

      assert %User{id: "user_1"} = Users.find_by_id!("user_1")
    end
  end
end
```

This test passes, but other tests that use `MyApp.Users` can fail randomly, because this module changes the global application configuration while running asynchronously. Any test running at the same time now gets `MyApp.Account.User` instead of `MyApp.Account.UserMock`, and it fails because it does not stub the external HTTP request.

Moreover, because the configuration value is never reverted, the tests that run after this module also fail.

Solution

Run the test module synchronously, and revert the configuration on exit. Add a comment to explain why this test cannot run with `async: true`.

```elixir
defmodule MyApp.UsersTest do
  @moduledoc """
  This test needs to run as `async: false` because it calls `Application.put_env/3`
  """

  use MyApp.DataCase, async: false
  # Requires `Mimic.copy(Http)` in test/test_helper.exs
  use Mimic

  alias MyApp.Account.Schemas.User
  alias MyApp.Users

  setup_all do
    Application.put_env(:my_app, :user_resource, MyApp.Account.User)

    # Restore the mock so the tests that run after this module are unaffected
    on_exit(fn ->
      Application.put_env(:my_app, :user_resource, MyApp.Account.UserMock)
    end)
  end

  describe "find_by_id!/1" do
    test "given a valid user ID, calls Account service and returns a User" do
      # stub the external request (Http.get!/2)
      expect(Http, :get!, fn _url, _user_id -> %User{id: "user_1"} end)

      assert %User{id: "user_1"} = Users.find_by_id!("user_1")
    end
  end
end
```
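Hard-coding the value to restore works as long as it matches `config/test.exs`. A more general sketch restores whatever was configured before, including "not set at all", and does so even if the function raises. `EnvHelper.with_env/4` is a hypothetical helper, not part of ExUnit:

```elixir
defmodule EnvHelper do
  @moduledoc """
  Hypothetical test helper: temporarily overrides an application env key and
  always restores the previous state, even if `fun` raises.
  """

  def with_env(app, key, value, fun) do
    # {:ok, value} if the key was set, :error otherwise
    previous = Application.fetch_env(app, key)
    Application.put_env(app, key, value)

    try do
      fun.()
    after
      case previous do
        {:ok, old_value} -> Application.put_env(app, key, old_value)
        :error -> Application.delete_env(app, key)
      end
    end
  end
end

EnvHelper.with_env(:my_app, :user_resource, MyApp.Account.User, fn ->
  Application.get_env(:my_app, :user_resource)
end)
# => MyApp.Account.User
```

The application environment is still global state, so a test using this helper must keep `async: false`; the helper only guarantees the cleanup.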

6. Using the Ecto SQL Sandbox Shared Mode In Asynchronous Tests

We use `Ecto.Adapters.SQL.Sandbox` in the test environment, so each test runs in its own database transaction, isolated from the other tests.

But sometimes, a feature runs across many processes. For example, one process creates the data and spawns ten processes to analyze that data concurrently.

To test that feature, we need to put the sandbox in shared mode. This way, all of these processes share a single database connection and transaction, so the spawned processes can query the data.

However, in shared mode, any process can use that connection, including processes from other tests running at the same time, so data leaks from one test to another. Asynchronous tests might then see unexpected data and fail randomly.

Solution

Run the test in synchronous mode if it requires the Sandbox shared mode.

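```elixir
defmodule MyApp.MyEndToEndTest do
  @moduledoc """
  This test needs to run as `async: false` because it uses the sandbox shared mode.
  """

  use ExUnit.Case, async: false

  setup do
    :ok = Ecto.Adapters.SQL.Sandbox.checkout(MyApp.Repo)
    # Every process can now use this test's connection and transaction
    Ecto.Adapters.SQL.Sandbox.mode(MyApp.Repo, {:shared, self()})
    :ok
  end

  describe "submits the Login form" do
    test "..." do
      # Main process: Create the test data
      # Sub process: Open browser, visit login form.
    end
  end
end
```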

7. Asserting on Exact Log Contents in Asynchronous Tests

When asserting on log output captured with `capture_log`, an exact comparison (`==`) can fail randomly, because logs can be emitted from anywhere in the app, including code that runs concurrently with the test. For example:

```elixir
assert logs == """
       log-information-1
       log-information-2
       """
```

While the actual log output is:

```
log-information-1
another-log-from-the-http-request
log-information-2
```

That test will fail because in the actual log output we also have another-log-from-the-http-request.

Solution

- Do not use the exact assert `==` when asserting logs. Prefer a regular expression or the `=~` operator.
- Run the test in synchronous mode.

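```elixir
defmodule MyApp.PurchasesTest do
  @moduledoc """
  This module needs to run as `async: false` because it uses capture_log
  """

  use MyApp.DataCase, async: false

  import ExUnit.CaptureLog

  alias MyApp.Purchases

  # `user`, `product`, and `params` come from a setup block that is not shown here
  test "writes logs about Airtable service", %{user: user, product: product, params: params} do
    # with_log/1 returns both the function's result and the captured logs,
    # so purchase_id is available outside the function
    {{:ok, purchase_id}, logs} =
      with_log(fn ->
        Purchases.charge(user, product, params)
      end)

    # A regex (not a plain string) so ".*" matches anything in between;
    # the "s" modifier lets "." match newlines
    assert logs =~
             ~r/\[info\] Enqueued the AirtableRecordCreator Oban job for the purchase #{purchase_id}.*\[info\] Creating purchase #{purchase_id}/s
  end
end
```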
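When the same check appears in many tests, the regex can be built from the expected lines. The hypothetical `LogMatcher` helper below escapes each line with `Regex.escape/1` and joins them with `.*`, so it passes as long as the lines appear in that order, whatever else is logged in between:

```elixir
defmodule LogMatcher do
  @moduledoc """
  Hypothetical helper: checks that the `expected` lines appear in `log` in the
  given order, allowing any other output between them.
  """

  def lines_in_order?(log, expected) do
    pattern =
      expected
      |> Enum.map(&Regex.escape/1)
      |> Enum.join(".*")

    # The "s" modifier lets "." match newlines, so unrelated lines may sit in between
    Regex.match?(Regex.compile!(pattern, "s"), log)
  end
end

log = """
log-information-1
another-log-from-the-http-request
log-information-2
"""

LogMatcher.lines_in_order?(log, ["log-information-1", "log-information-2"])
# => true
LogMatcher.lines_in_order?(log, ["log-information-2", "log-information-1"])
# => false
```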

8. Running All UI Tests Asynchronously on CI

CI runners usually have limited memory. In asynchronous mode, each UI test running concurrently opens its own browser instance, which can make the CI run out of memory.

Solution

Limit asynchronous execution of browser tests; prefer to run them synchronously.

What About a Slow Test Suite?

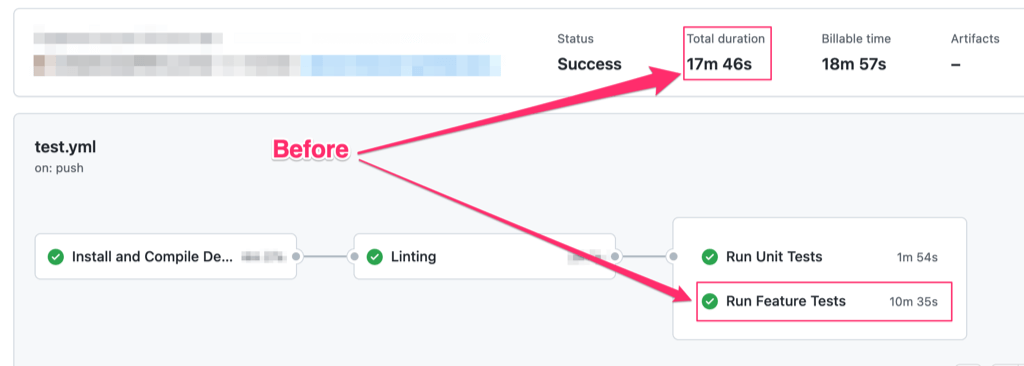

A slow test suite slows down the development process, from merging a new Pull Request to deployment, and also increases the cost of CI.

A slow test suite combined with the flaky tests described above is a painful combo. Imagine the test suite takes 20 minutes to run and, after 19 minutes of waiting, a flaky test fails in the last minute. We have to retry and wait another 20 minutes.

Tips to Speed up the Test Suite

1. Keep the Power of Async Tests Whenever Possible

Thanks to Erlang and ExUnit, we can easily make our tests run asynchronously by passing `async: true` to `use ExUnit.Case`, or to a case template built on it such as `MyApp.DataCase`.

```elixir
defmodule MyApp.KeywordsTest do
  use MyApp.DataCase, async: true

  # Test codes
end
```

Check Understanding Test Concurrency In Elixir for more information.

But as mentioned above, some tests cannot run asynchronously. It is fine to run those with `async: false`.

Rule of thumb:

- Whenever possible, always run the tests concurrently.

```elixir
defmodule MyApp.KeywordsTest do
  use MyApp.DataCase, async: true

  # Test codes
end
```

- In cases where we cannot run the test asynchronously, explicitly state `async: false` with a comment rather than leaving it blank.

```elixir
# Bad
defmodule MyApp.KeywordsTest do
  use MyApp.DataCase

  # Test codes
end

# Good
defmodule MyApp.KeywordsTest do
  @moduledoc """
  This module needs to run as `async: false` because ....
  """

  use MyApp.DataCase, async: false

  # Test codes
end
```

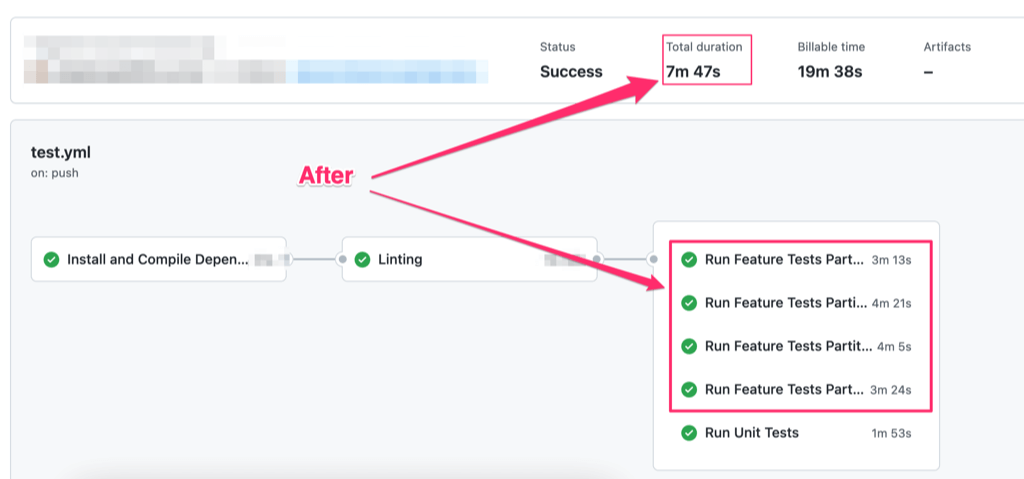

2. Use ExUnit Partitioning

Besides running tests asynchronously, ExUnit offers another way to speed up the test suite: partitioning it, so that each partition runs in a separate OS process, for example on a separate CI job.

The `mix test` documentation describes this option in detail.

We usually partition the system tests, so multiple jobs run the UI tests in parallel on the CI server.

```yaml
name: Test

on: pull_request

jobs:
  ui_test:
    strategy:
      matrix:
        mix_test_partition: [1, 2, 3, 4]
    ...
    steps:
      ...
      - name: Run Tests
        run: mix test --only ui_test --partitions 4
        env:
          MIX_TEST_PARTITION: ${{ matrix.mix_test_partition }}
```
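Partitions that run on the same machine, for example locally with several `MIX_TEST_PARTITION` values at once, would otherwise share one test database. Projects generated by Phoenix address this by appending the partition number to the test database name. A sketch of `config/test.exs`, assuming the `:my_app` and `MyApp.Repo` names used in this article:

```elixir
# config/test.exs
import Config

config :my_app, MyApp.Repo,
  # "my_app_test1", "my_app_test2", ... one database per partition;
  # plain "my_app_test" when MIX_TEST_PARTITION is unset
  database: "my_app_test#{System.get_env("MIX_TEST_PARTITION")}",
  pool: Ecto.Adapters.SQL.Sandbox
```

On CI, each matrix job gets its own runner, so the suffix matters mostly when partitions share a database server.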

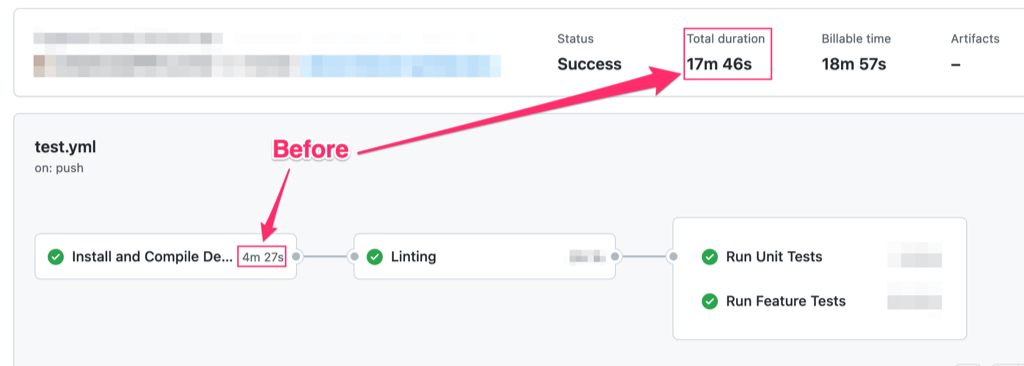

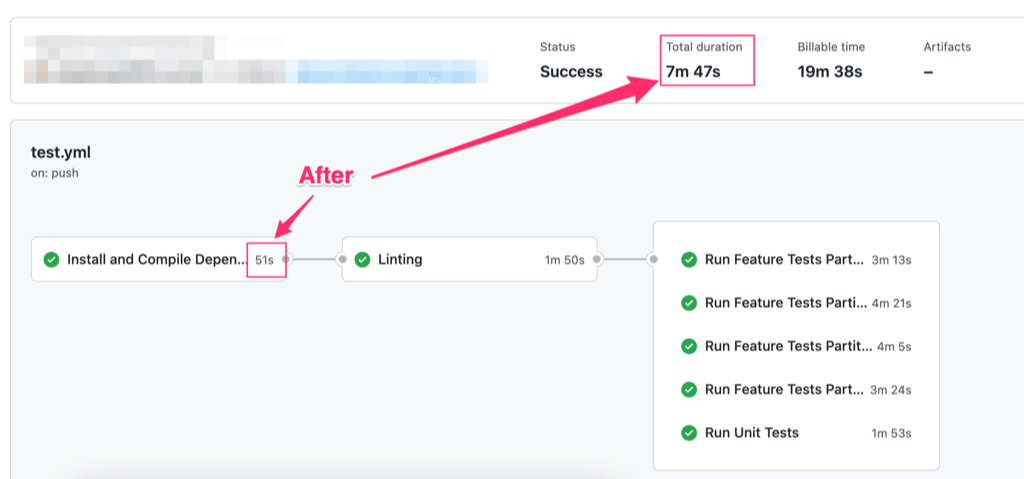

3. Use Cache on CI

Fetching, installing, and compiling dependencies can take several minutes. For example, a Phoenix web project needs to:

- Fetch, install and compile all Elixir dependencies from hex.pm

- Fetch, install and compile all Node dependencies from npm

We can leverage the cache feature of most CI providers to speed up these steps.

Below is an example of using cache on GitHub Actions.

```yaml
name: Test

on: pull_request

jobs:
  ui_test:
    ...
    steps:
      ...
      - name: Cache Elixir build
        uses: actions/cache@v4
        with:
          path: |
            _build
            deps
          key: ${{ runner.os }}-mix-${{ hashFiles('**/mix.lock') }}
          restore-keys: |
            ${{ runner.os }}-mix-

      - name: Cache Node npm
        uses: actions/cache@v4
        with:
          path: assets/node_modules
          key: ${{ runner.os }}-node-${{ hashFiles('**/package-lock.json') }}
          restore-keys: |
            ${{ runner.os }}-node-

      - name: Install Dependencies
        run: mix deps.get

      - name: Compile dependencies
        run: mix compile --warnings-as-errors --all-warnings

      - name: Install Node modules
        run: npm --prefix assets install
      ...
```

4. Avoid Expensive Operations in the Test Environment

Some operations, such as password hashing, are deliberately expensive in the development and production environments. To reduce time and costs when running our tests, some libraries let us configure a cheaper version of these operations in the test environment.

For example, when using the following libraries, we can speed up the test suite by adjusting the config/test.exs file:

- argon2_elixir:

```elixir
# config/test.exs
config :argon2_elixir, t_cost: 1, m_cost: 8
```

- bcrypt_elixir:

```elixir
# config/test.exs
config :bcrypt_elixir, :log_rounds, 4
```
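To give a sense of scale: bcrypt's expensive key setup runs $2^{\text{log\_rounds}}$ times, so the cost grows exponentially with `log_rounds`. Compared with a cost of 12 (an illustrative production value, assumed here rather than taken from any particular project), a cost of 4 does

$$
\frac{2^{12}}{2^{4}} = 2^{8} = 256
$$

times less key-setup work per hash, which adds up quickly in a suite that creates many users.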

Conclusion

We have seen the benefits of a fast and stable test suite. Hopefully, these tips improve your test suite while also reducing friction for your team!

If you need to build or maintain an Elixir application, Nimble offers development services for Elixir and Phoenix.

Happy coding!